The world of Artificial Intelligence is moving at a breathtaking pace. Large Language Models (LLMs) like GPT-4 have demonstrated remarkable abilities to generate human-like text. However, anyone who has used them extensively knows their critical weakness: they can hallucinate, presenting outdated or entirely fabricated information as fact. They are knowledge-bound by their training data, making them unreliable for tasks requiring specific, up-to-date, or proprietary information.

What if you could combine the creative power of an LLM with the precision of a search engine? This is the promise of Retrieval-Augmented Generation (RAG). And while the concept of RAG is powerful, implementing it from scratch is complex. This is where RAGFlow comes in.

This beginner’s guide will demystify RAGFlow, walking you through what it is, why it’s a game-changer, and how you can get your own RAG system up and running.

Table of Contents

What is Retrieval-Augmented Generation (RAG)? A Quick Primer

Before we dive into RAGFlow, it’s essential to understand the foundational technology it’s built upon: Retrieval-Augmented Generation.

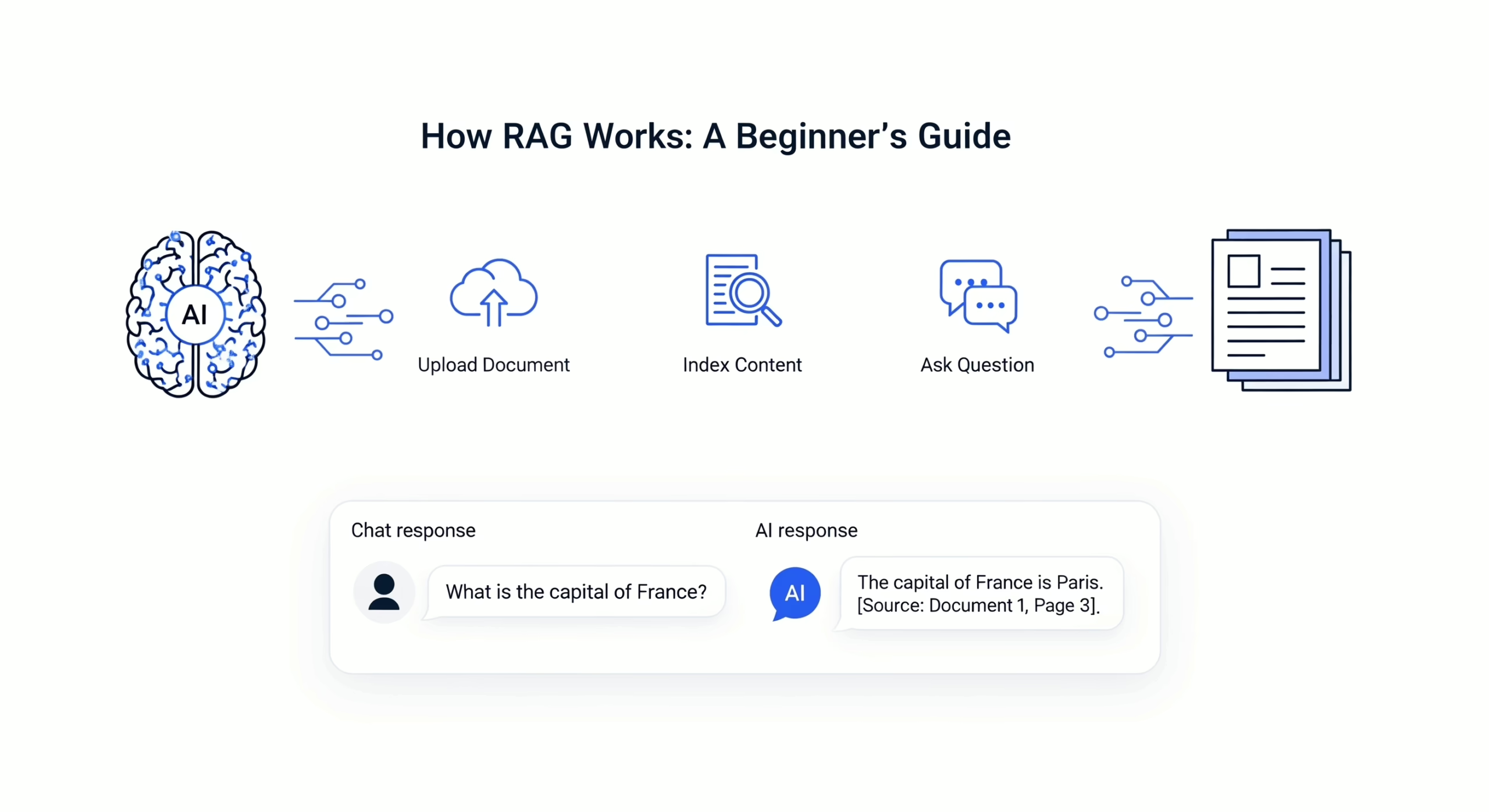

In simple terms, RAG is a hybrid AI framework that works in two distinct phases:

- Retrieval: When a user asks a question (queries), the system first searches a designated knowledge base (like your company’s documents, a database, or a set of PDFs) to find relevant, factual information.

- Augmented Generation: The system then feeds both the user’s original question and the retrieved, relevant information to a Large Language Model. The LLM uses this grounded context to generate a highly accurate, contextual, and sourced answer.

Think of it like a supremely efficient research assistant. Instead of an LLM relying on its possibly outdated or generalized memory, it’s given a stack of the most relevant reports and books to base its answer on. This leads to responses that are:

- More accurate and factually correct.

- Based on your specific data (reducing “hallucinations”).

- Traceable, as you can often see the source documents used.

What is RAGFlow?

So, where does RAGFlow fit in? RAGFlow is an open-source RAG engine that provides a streamlined, user-friendly platform to build and deploy your own RAG systems. Developed by Infinigence, it abstracts away the immense complexity of building a RAG pipeline from zero.

Instead of wrestling with vector databases, embedding models, chunking strategies, and API integrations, RAGFlow offers a cohesive environment where you can focus on what matters: your data and the answers you need.

It’s not just a wrapper for an LLM; it’s a complete, feature-rich application designed to make advanced RAG accessible to developers, data scientists, and even tech-savvy business users.

Key Features and Benefits of Using RAGFlow

Why should you choose RAGFlow over building your own system or using another tool? Here are its standout features:

- Deep Document Understanding: RAGFlow goes beyond simple text extraction. It can parse complex files like PDFs, PPTs, and Word documents while understanding layouts, tables, and figures, preserving critical structural information.

- Automatic Text Chunking: It intelligently breaks down documents into semantically meaningful chunks, which is crucial for accurate retrieval. You can customize chunking strategies based on your data.

- Visualized RAG Pipeline: One of its most lauded features is a user interface that lets you visually trace and debug the entire RAG process—from the original query to the retrieved text chunks to the final generated answer.

- Support for Multiple LLMs: It is not locked into a single model. RAGFlow supports various open-source and proprietary LLMs, including OpenAI GPT, Claude, and local models via Ollama or vLLM, giving you flexibility and cost control.

- Open-Source Advantage: Being open-source means it’s free to use, highly customizable, and backed by a community that continuously improves it.

How to Install and Set Up RAGFlow

Getting RAGFlow running on your local machine is straightforward, thanks to Docker. This guide will use the Docker method, which is the recommended approach to avoid dependency conflicts.

Prerequisites

Before you begin, ensure you have the following installed on your system:

- Docker: The containerization platform that will run the RAGFlow server. You can download it from the official Docker website.

- Docker Compose: A tool for defining and running multi-container Docker applications. It usually comes bundled with Docker Desktop.

- A Modern Web Browser: Such as Chrome, Firefox, or Edge.

Step-by-Step Installation Guide

We’ll use Docker Compose to spin up RAGFlow along with its required dependencies, like a database and vector store.

- Create a Project Directory: Open your terminal or command prompt and create a new directory for your RAGFlow project.

mkdir ragflow-project cd ragflow-project - Download the Docker Compose File: Fetch the official

docker-compose.ymlfile from the RAGFlow repository.curl -o docker-compose.yml https://raw.githubusercontent.com/infiniflow/ragflow/main/docker/standalone/docker-compose.yml - Launch the Containers: Run Docker Compose to pull the necessary images and start the services. This may take a few minutes on the first run.

docker-compose up -dThe-dflag runs the containers in “detached” mode, meaning they will run in the background. - Verify the Installation: Check that all containers are running correctly.

docker psYou should see at least two containers: one forragflowand another for its database (likelymysql). - Access the RAGFlow Web Interface: Once the containers are running, open your web browser and navigate to

http://localhost:9380. You should see the RAGFlow login page. - Log In: The default credentials are:

- Username:

admin - Password:

admin

- Username:

Congratulations! You have successfully installed RAGFlow. You will be greeted by the dashboard, which is your central hub for creating knowledge bases and interacting with your RAG system.

Creating Your First Knowledge Base in RAGFlow

A “Knowledge Base” is the core of your RAG system in RAGFlow. It’s the repository where you upload your documents, and it handles the entire backend process of chunking, embedding, and indexing.

Step 1: Create a New Knowledge Base

- From the dashboard, click on “Knowledge Base” in the left-hand sidebar.

- Click the blue “+ Create” button.

- Give your knowledge base a descriptive name (e.g., “Company HR Handbook”) and an optional description.

Step 2: Configure LLM and Embedding Models

This is a critical step where you define the “brains” of your operation.

- LLM Provider: Choose from the dropdown. For beginners, if you have an OpenAI API key, selecting “OpenAI” is a good start. For a fully local and free option, you can set up “Ollama” on your machine and select it here.

- Embedding Model: This model converts your text chunks into numerical vectors. RAGFlow provides several options.

BAAI/bge-large-en-v1.5is a popular, high-performing open-source choice for English text.

Step 3: Define Your Chunking Strategy

How your documents are split is vital for retrieval quality. RAGFlow offers two main types:

- Auto Chunking: The system automatically decides the chunk size and overlap. This is a good starting point.

- Manual Chunking (Recommended for Advanced Use): You can specify:

- Chunk Size: The number of tokens or characters per chunk. 512 is a common starting point.

- Chunk Overlap: The number of tokens/characters that consecutive chunks share. A small overlap (e.g., 50) helps preserve context across chunks.

For your first test, “Auto” is perfectly fine.

Step 4: Upload Your Documents

Now for the fun part—feeding your knowledge base!

- Click into your newly created Knowledge Base.

- Go to the “Files” tab and click “Upload File.”

- Drag and drop or select files from your computer. RAGFlow supports a wide range of formats:

- Text: TXT, MD

- Documents: PDF, DOCX, PPTX

- Data: CSV

- Images: JPG, PNG (with OCR capabilities)

Once uploaded, RAGFlow will automatically begin the processing pipeline: parsing, chunking, creating embeddings, and indexing them into its vector database. You can monitor the progress in the interface.

Querying Your Knowledge Base: Putting RAG to the Test

After your documents have been processed (you’ll see a status like “Completed”), your RAG system is live and ready to answer questions.

- Navigate to the “Chat” window within your Knowledge Base.

- In the chat input box, ask a question that is directly answered by the documents you uploaded.

For example, if you uploaded your company’s HR policy, you could ask: “How many paid vacation days do employees get in their first year?”

Understanding the Results: The Power of Visualization

This is where RAGFlow truly shines. The answer will appear in the chat bubble. But more importantly, look for the “Retrieval” tab or a “Source” button.

Clicking on this will show you:

- The exact text chunks from your documents that were retrieved to answer the question.

- A relevance score for each chunk.

- The specific document and page number the chunk came from.

This transparency allows you to verify the answer’s accuracy and debug why certain information was or wasn’t retrieved. It builds trust in the system’s output.

Best Practices for Optimal RAGFlow Performance

To get the most out of RAGFlow, follow these best practices:

- Start with High-Quality Data: The principle of “garbage in, garbage out” is paramount. Ensure your source documents are clean, well-formatted, and contain the accurate information you want the system to use.

- Experiment with Chunking: The default chunking is good, but not perfect for all use cases. For long, structured documents, a smaller chunk size might yield more precise results. For conceptual questions, a larger chunk might be better. Use the retrieval visualization to test and refine.

- Use a Suitable LLM: The choice of LLM matters. For simple Q&A, a smaller, cheaper model might suffice. For complex synthesis and reasoning, a more powerful model like GPT-4 will perform significantly better.

- Implement a “Hybrid Search”: RAGFlow supports hybrid search, which combines traditional keyword-based search with semantic (vector) search. This can be more robust, as it can find relevant information even if the exact phrasing differs.

- Secure Your Deployment: The default installation is for development. Before deploying to production, change the default passwords, secure your Docker environment, and consider network security.

Real-World Use Cases for RAGFlow

RAGFlow’s flexibility makes it applicable across numerous domains:

- Enterprise Internal Help Desks: Create a chatbot that answers employee questions about IT policies, HR benefits, or internal processes by feeding it company handbooks and guides.

- Customer Support: Build a support agent that provides accurate, instant answers from product manuals, knowledge bases, and support tickets, reducing the load on human agents.

- Legal and Compliance: Allow lawyers and compliance officers to quickly query a vast library of contracts, case law, or regulatory documents.

- Academic Research: Researchers can upload dozens of papers and ask specific questions about methodologies or findings, effectively creating an interactive literature review tool.

- Content Creation and Management: Content teams can use it to ensure brand consistency by querying style guides, past campaign data, and brand bibles.

Conclusion: Your Gateway to Reliable AI

RAGFlow successfully demystifies and democratizes Retrieval-Augmented Generation. It transforms a complex architectural pattern into a tangible, usable tool that anyone can deploy to create powerful, context-aware AI applications grounded in their own truth.

By following this guide, you’ve taken the first crucial steps. You’ve installed RAGFlow, created a knowledge base, and witnessed firsthand how it delivers accurate, verifiable answers. The journey from here involves experimentation—refining your chunking strategies, testing different LLMs, and feeding it more diverse datasets. The potential to build intelligent, trustworthy, and proprietary AI systems is now at your fingertips. Dive in, explore, and start building the future of informed AI.